Running Your Own LLM: From Setup to Production

Interactive Guide to LLM Implementation: From Setup to Production

1. Understanding Large Language Models (LLMs)

Objective: Learn what LLMs are and interact with a pre-trained model to understand their capabilities.

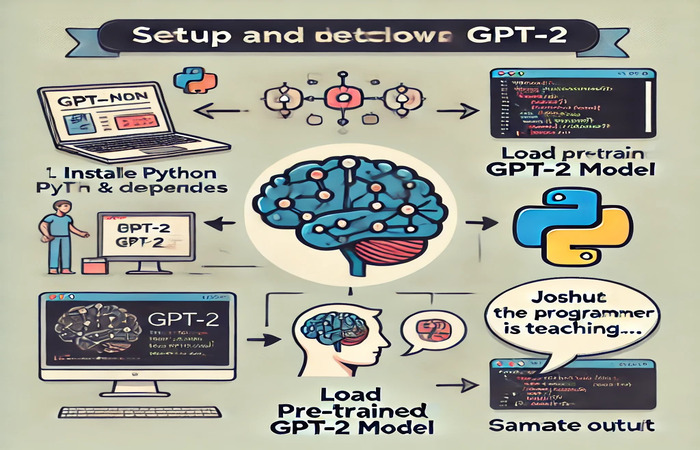

Environment Setup:

Before you start working with Large Language Models (LLMs), you need to prepare your computer. Follow these steps:

- Install Python (≥3.8): Visit the Python Downloads page and install Python version 3.8 or higher.

- Install pip, the Python package manager: Open your command line and type:

python3 -m ensurepip --upgrade

- Set up a virtual environment: A virtual environment helps you keep your projects clean and organized. Run the following commands:

python3 -m venv llm-env

source llm-env/bin/activate

- Install Hugging Face Transformers and PyTorch: Transformers provides the pre-trained models and pipelines, and it needs a deep learning backend such as PyTorch to run them. To install both, run:

pip install transformers torch
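Before moving on, it can help to confirm that the interpreter and virtual environment from the steps above are the ones actually in use. The following is a minimal sketch using only the standard library; the helper names `python_is_supported` and `in_virtualenv` are made up for this illustration.

```python
import sys

MIN_VERSION = (3, 8)  # minimum version stated in this guide


def python_is_supported(version_info=sys.version_info, minimum=MIN_VERSION):
    """Return True when the interpreter meets the minimum major.minor version."""
    return tuple(version_info[:2]) >= minimum


def in_virtualenv():
    """Return True inside a venv, where sys.prefix differs from sys.base_prefix."""
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("Python version OK:", python_is_supported())
print("Virtual environment active:", in_virtualenv())
```

If the second line prints False, the environment has probably not been activated in the current shell.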

Hands-On Example:

Task: Use a pre-trained GPT-2 model to generate text.

Code:

from transformers import pipeline

# Load a text generation pipeline

generator = pipeline("text-generation", model="gpt2")

# Generate text

result = generator("Joshua The Programmer is teaching", max_length=50)

print(result)

Output: This will generate a continuation of the prompt "Joshua The Programmer is teaching," showcasing how LLMs predict and complete text.

Try It Yourself: Run the code and experiment with different prompts or parameters like max_length.
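To build intuition for what "predict and complete text" means, here is a toy sketch that counts which word follows which in a tiny made-up corpus and then greedily appends the most frequent next word. It is not how GPT-2 works internally (GPT-2 uses a neural network over subword tokens); it only illustrates the idea of repeatedly choosing a likely next token. All names and the corpus are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def complete(prompt, following, max_new_words=5):
    """Greedily append the most frequent next word until none is known."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = following.get(words[-1])
        if not candidates:
            break
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = "the model reads text . the model predicts the next word . the model reads text"
table = train_bigrams(corpus)
print(complete("the model", table, max_new_words=3))
```

The `max_new_words` argument plays a role loosely similar to `max_length` in the pipeline call: it caps how much text is produced.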

2. Build vs. Buy: Deploying an LLM

Objective: Learn how to fine-tune an LLM for a specific task and deploy it.

- Extend the above setup by installing datasets and accelerate:

pip install datasets accelerate

- Download a dataset from Hugging Face: The dataset will help the model learn new information. Run:

from datasets import load_dataset

dataset = load_dataset("yelp_polarity", split="train[:1%]")

print(dataset[0])

Fine-Tuning GPT-2

Code: Save this as fine_tune.py

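from datasets import load_dataset
from transformers import (
    DataCollatorForLanguageModeling,
    GPT2LMHeadModel,
    GPT2Tokenizer,
    Trainer,
    TrainingArguments,
)

# Load the same 1% slice of the Yelp reviews used above
dataset = load_dataset("yelp_polarity", split="train[:1%]")

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
# GPT-2 has no padding token, so reuse the end-of-text token for padding
tokenizer.pad_token = tokenizer.eos_token
model = GPT2LMHeadModel.from_pretrained("gpt2")

# Tokenize dataset (max_length=128 is an assumed limit to keep memory use modest)
def tokenize(batch):
    return tokenizer(batch["text"], truncation=True, max_length=128)

dataset = dataset.map(tokenize, batched=True, remove_columns=dataset.column_names)

# Pad each batch and copy input_ids into labels for next-token prediction
data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

# Training
training_args = TrainingArguments(
    output_dir="./results",
    num_train_epochs=1,
    per_device_train_batch_size=4,
    save_steps=10_000,
    save_total_limit=2,
)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=dataset,
    data_collator=data_collator,
)

trainer.train()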
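Fine-tuning a causal language model such as GPT-2 means training it to predict each next token, so every batch needs labels in addition to input_ids and attention_mask. The sketch below shows, in plain Python, roughly what a batch looks like after padding: labels are a copy of the inputs, and padded positions are set to -100, the value PyTorch's cross-entropy loss ignores by default. The pad id of 0 and the function name are assumptions made for illustration, not values taken from the GPT-2 tokenizer.

```python
PAD_ID = 0           # hypothetical pad token id, for illustration only
IGNORE_INDEX = -100  # label value skipped by PyTorch's cross-entropy loss by default


def collate_for_causal_lm(sequences, pad_id=PAD_ID):
    """Pad token-id lists to equal length and build attention masks and labels."""
    longest = max(len(seq) for seq in sequences)
    batch = {"input_ids": [], "attention_mask": [], "labels": []}
    for seq in sequences:
        padding = longest - len(seq)
        batch["input_ids"].append(seq + [pad_id] * padding)
        batch["attention_mask"].append([1] * len(seq) + [0] * padding)
        # Labels copy the inputs; padded positions are masked out of the loss.
        batch["labels"].append(seq + [IGNORE_INDEX] * padding)
    return batch


batch = collate_for_causal_lm([[11, 12, 13], [21, 22]])
print(batch)
```

In a real run this work is normally left to the library's data collator rather than written by hand.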

Run the Training: To train the model, type this in the command line:

python fine_tune.py
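It is worth estimating how many optimizer steps the run will take before starting it. The sketch below does the arithmetic for the settings above, assuming a single device, no gradient accumulation, and that the yelp_polarity train split has 560,000 rows (so the 1% slice is 5,600 rows). The function name `training_steps` is made up for this example.

```python
import math


def training_steps(num_examples, batch_size, epochs=1, num_devices=1):
    """Optimizer steps for a run without gradient accumulation."""
    steps_per_epoch = math.ceil(num_examples / (batch_size * num_devices))
    return steps_per_epoch * epochs


# Assumption: 1% of a 560,000-row train split is 5,600 examples.
examples = 5_600
steps = training_steps(examples, batch_size=4, epochs=1)
save_steps = 10_000  # value from the TrainingArguments above

print("total steps:", steps)
print("step-triggered checkpoints:", steps // save_steps)
```

Under these assumptions the run is shorter than save_steps, so the step-based schedule never fires during training. Saving the model explicitly afterwards is the dependable way to keep the result.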

Deploy the Model:

- Save the fine-tuned model:

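model.save_pretrained("./my-fine-tuned-model")

tokenizer.save_pretrained("./my-fine-tuned-model")

Add these two lines to the end of fine_tune.py, after trainer.train(), so the weights and tokenizer are written once training finishes.

- Serve the model with Gradio: Gradio wraps a Python function in a simple web interface that runs on your machine. First, install Gradio:

pip install gradio

Then, use the following code to create a simple web interface:

import gradio as gr
from transformers import pipeline

# Load the fine-tuned model saved in the previous step
generator = pipeline("text-generation", model="./my-fine-tuned-model")

def generate_text(prompt):
    result = generator(prompt, max_length=50)
    return result[0]["generated_text"]

demo = gr.Interface(fn=generate_text, inputs="text", outputs="text")
demo.launch()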
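By default, the text-generation pipeline returns the prompt followed by the continuation, so a web interface will echo the user's input back. If only the new text should be shown, a small helper like the one below could be applied to the result. This is a sketch: `extract_continuation` is a hypothetical name, and the hard-coded `fake_output` stands in for a real pipeline result.

```python
def extract_continuation(prompt, pipeline_output):
    """Return only the newly generated text from a text-generation result.

    Assumes the output shape [{"generated_text": "..."}] used in this guide.
    """
    full_text = pipeline_output[0]["generated_text"]
    if full_text.startswith(prompt):
        return full_text[len(prompt):].lstrip()
    return full_text


# Hard-coded stand-in for a real pipeline result, for illustration only.
fake_output = [{"generated_text": "Hello world, this is a test."}]
print(extract_continuation("Hello world,", fake_output))
```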

3. Overcoming Deployment Challenges

Objective: Optimize an LLM for production to reduce costs and latency.

Steps:

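- Use Quantization: Quantization stores weights at lower precision (for example, 8-bit integers instead of 32-bit floats), which makes the model smaller and usually faster. With Optimum, the first step is to export the model to ONNX so it can run on ONNX Runtime.

First, install the optimum library with its ONNX Runtime extra:

pip install "optimum[onnxruntime]"

Then, export the model for inference:

from optimum.onnxruntime import ORTModelForCausalLM

# export=True converts the PyTorch checkpoint to ONNX while loading
optimized_model = ORTModelForCausalLM.from_pretrained("gpt2", export=True)

optimized_model.save_pretrained("./optimized-model")

The export alone does not quantize the weights. Optimum's ORTQuantizer can then be applied to the saved ONNX model as a separate step.

- Containerize with Docker: Docker helps you package the model and run it in any environment. Create a Dockerfile:

FROM python:3.8-slim
WORKDIR /app
COPY . /app
RUN pip install -r requirements.txt
CMD ["python", "app.py"]

The Dockerfile expects a requirements.txt next to app.py that lists the packages the app imports (for the Flask app in section 4: flask, transformers, and torch).

Build and run the Docker container:

docker build -t llm-app .

docker run -p 5000:5000 llm-app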
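To see why quantization shrinks a model, the toy sketch below maps a few floating-point values to 8-bit integers with a single shared scale factor and then maps them back. Real toolchains are more elaborate (per-channel scales, zero points, calibration data), so treat this as an illustration of the idea only. The weight values are made up.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] with one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.02, 1.0]  # made-up values, not real model weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", q)
print("largest rounding error:", max_error)
```

Each stored value now fits in one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight.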

4. Interactive Use Case: Industry Applications

E-Commerce Chatbot

- Install Flask:

pip install flask

- Create app.py: This code will create a simple chatbot that answers questions.

from flask import Flask, request, jsonify
from transformers import pipeline

app = Flask(__name__)
generator = pipeline("text-generation", model="gpt2")

@app.route("/generate", methods=["POST"])
def generate():
    data = request.get_json(silent=True) or {}
    prompt = data.get("prompt")
    if not prompt:
        # Reject requests that do not include a prompt
        return jsonify({"error": "Missing 'prompt' in JSON body"}), 400
    result = generator(prompt, max_length=50)
    return jsonify(result)

if __name__ == "__main__":
    app.run(host="0.0.0.0", port=5000)

- Test the API: You can test your chatbot using the following command:

curl -X POST http://localhost:5000/generate -H "Content-Type: application/json" -d '{"prompt": "Welcome to Joshua The Programmer’s chatbot"}'
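The same request can be made from Python, which is convenient when the chatbot is called from another service. The sketch below uses only the standard library and mirrors the curl command: a POST with a JSON body to the /generate endpoint. The function names `build_request` and `ask` are invented for this example, and `ask` only works while the Flask server is running.

```python
import json
import urllib.request

API_URL = "http://localhost:5000/generate"  # endpoint from the curl command above


def build_request(prompt, url=API_URL):
    """Create a POST request carrying the prompt as a JSON body."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=30):
    """Send the request and decode the JSON reply; requires the server to be running."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_request("Welcome to the chatbot")
print(request.get_method(), request.full_url)
```

Calling `ask("Welcome to the chatbot")` would then return the parsed JSON that the endpoint sends back.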

5. Security and Privacy

Objective: Secure the model and its data in production.

Steps:

- Use environment variables for sensitive keys: Never hard-code sensitive data like API keys.

export API_KEY="your-key-here"

Access it in Python:

import os

api_key = os.getenv("API_KEY")

- Encrypt sensitive data: Use encryption to protect sensitive information.

Install the cryptography library:

pip install cryptography

Use this code to encrypt and decrypt data:

from cryptography.fernet import Fernet

# Generate the key once and store it securely; data cannot be decrypted without it
key = Fernet.generate_key()
cipher = Fernet(key)

encrypted = cipher.encrypt(b"My secret data")
decrypted = cipher.decrypt(encrypted)
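For the environment-variable step above, it is often safer to fail at startup when a key is missing than to carry a None value into later calls, and to avoid printing the full secret in logs. The sketch below shows one way this might look using only the standard library; `require_env` and `mask_secret` are hypothetical helper names, and the demo string is not a real key.

```python
import os


def require_env(name):
    """Return the value of an environment variable or fail with a clear error."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


def mask_secret(secret, visible=4):
    """Hide all but the last few characters so the value is safe to log."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


# Demo with a literal string; in an app this would be mask_secret(require_env("API_KEY")).
print(mask_secret("demo-key-1234"))
```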

This hands-on guide teaches you how to fine-tune large language models (LLMs) to create blog posts and marketing content. You’ll set up your environment, train the model on a sample dataset, and deploy the model to generate engaging, SEO-optimized content. Perfect for content creators and marketers looking to automate repetitive tasks while maintaining quality.
